Last week, a few of us attended ECIR 2011 in Dublin. The conference was a resounding success both in terms of its program and organisation. Compared to last year, the event was very well attended, with about 250 delegates registered for the conference and/or its satellite events. The majority of delegates were from Ireland and the United Kingdom.

Workshops

The kick-off was on Monday, with a selection of workshops and tutorials at the fabulous Guinness Storehouse. We attended the Diversity in Document Retrieval (DDR 2011) workshop, jointly organised by Craig Macdonald, Jun Wang, and Charlie Clarke.

The DDR workshop was at times a standing-room-only event and appeared to be the largest workshop of the conference. It was structured around three broad themes: evaluation, modelling, and applications. Besides good keynotes by Tetsuya Sakai and Alessandro Moschitti, the workshop featured technical and position paper presentations, as well as a poster session and a breakout group discussion on all three workshop themes. While there was no agreement on a possible "killer application" for diversity, there was a consensus that diversity is best described as the lack of context. In addition, a few key points arose that cut across the workshop themes:

- Representing diversity

  How to best represent the possible multiple information needs underlying a query? Should this representation reflect the interests of the user population, or should it be itself diverse?

- Measuring diversity

  What does diversity mean and how should it be promoted in different scenarios? The workshop featured some ideas for applications, including expert search, geographical IR, and graph summarisation.

- Unifying diversity

  How to diversify across multiple search scenarios (e.g., multiple verticals of a search engine)? How to convey a summary relevant to multiple information needs in a single page of results?

Some of these ideas are currently being investigated as part of the NTCIR-9 Intent task. Charlie was also keen to consider these questions in future incarnations of the diversity task in the TREC Web track. During the workshop, Rodrygo presented our position paper entitled "Diversifying for multiple information needs". The full DDR workshop proceedings are available online.

Although we did not attend it, it is worth noting that the Information Retrieval Over Query Sessions workshop, which was held at the same time as DDR, also received very positive feedback from its attendees.

The workshops were followed by an excellent welcome reception where, to say the least, Guinness was not in short supply.

Conference

On Tuesday, the main conference took over with a diverse (no pun intended) program. The conference started with a thoughtful keynote by Kalervo Järvelin who urged the information retrieval community to see beyond the [search] box. The keynote led to some very interesting discussions about whether IR is a science or a technology (i.e. mostly about engineering). We would like to believe that it is science, although some delegates argued (sadly) for the opposite.

The second keynote was given by Evgeniy Gabrilovich, winner of this year's KSJ Award. Evgeniy provided a very comprehensive overview of the fascinating computational advertising field, highlighting the current state-of-the-art and possible future research directions. We were encouraged to hear about the Yahoo! Faculty Research and Engagement Program (FREP), which might allow academics to access the necessary datasets to conduct research in a field that has been thus far the sole territory of researchers based in industry.

The last keynote talk was superbly given by Thorsten Joachims about the value of user feedback. Thorsten convincingly argued for the importance of collecting user feedback as an intrinsic part of both the retrieval and learning processes. The talk highlighted how user feedback could improve the quality of retrieval and by how much. We hope that the slides will be made publicly available at some point.

As for the rest of the program, there were two types of papers/presentations: full papers were presented in 30 min, while short papers had only 15 min. As usual, the quality of papers (or at least the presentations) varied from the outstanding to the less good. One suggestion for future ECIR conferences is to limit all the talks to at most 20 min, encouraging conciseness and pushing the speakers to focus on the "message out of the bottle". Indeed, some talks appeared to be exceedingly long with respect to their informative content. While we see the value of giving a 30 min slot to a 10-page ACM-style paper, there does not seem to be a valid reason for giving that much time to a (comparatively much shorter) 12-page LNCS-style paper.

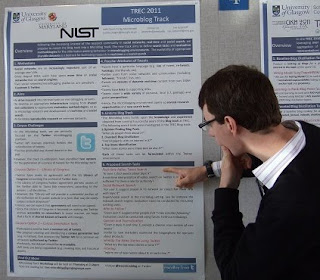

It was interesting to see several Twitter-related papers in the program, suggesting that the community will find the upcoming TREC 2011 Microblog track and its corresponding shared dataset particularly useful. The theme of crowdsourcing also featured prominently in the conference, with several papers showing how cheap and reliable relevance assessments could be obtained through Amazon Mechanical Turk or similar services. Finally, we were very pleased to see many of the presented papers using our open source Terrier software in their experiments.

Overall, a few papers caught our attention and were particularly interesting:

- On the contributions of topics to system evaluation

  Steve Robertson

- Caching for realtime search (in our opinion by far the best paper/presentation of the conference)

  Edward Bortnikov, Ronny Lempel and Kolman Vornovitsky

- Are semantically related links effective for retrieval?

  Marijn Koolen and Jaap Kamps

- A methodology for evaluating aggregated search results (an excellent paper/presentation that also received the best student paper award)

  Jaime Arguello, Fernando Diaz, Jamie Callan and Ben Carterette

- Design and implementation of relevance assessments using crowdsourcing

  Omar Alonso and Ricardo Baeza-Yates

- The power of peers

  Nick Craswell, Dennis Fetterly and Marc Najork

- Automatic people tagging for expertise profiling in the enterprise

  Pavel Serdyukov, Mike Taylor, Vishwa Vinay, Matthew Richardson and Ryen W. White

- What makes re-finding information difficult? A study of email re-finding

  David Elsweiler, Mark Baillie and Ian Ruthven

Of course, we also recommend our own paper, which was nominated for the best paper award, and for which we received excellent feedback:

- Learning models for ranking aggregates

  Craig Macdonald and Iadh Ounis

The program also featured a busy poster and demo session. We liked the work of Gerani, Keikha, Carman and Crestani on identifying personal blogs using the TREC Blog track, and that of Perego, Silvestri and Tonellotto, which suggests that document length can be quantized from docids without loss of retrieval effectiveness. Several interesting demos also caught our eye:

- ARES - A retrieval engine based on sentiments: Sentiment-based search result annotation and diversification (which used our xQuAD framework for diversifying sentiments)

  Gianluca Demartini

- Conversation Retrieval from Twitter

  Matteo Magnani, Danilo Montesi, Gabriele Nunziante and Luca Rossi

- Finding Useful Users on Twitter: Twittomender the Followee Recommender (addressed the Who to Follow (WTF?) task on Twitter)

  John Hannon, Kevin McCarthy and Barry Smyth

The ECIR organisers hosted a particularly sumptuous conference banquet at the impressive, unique and beautiful venue of The Village at Lyons Demesne in County Kildare. The journey to the village was a welcome break from the hotel setting of the conference and its technical program.

On the last day of the conference, an Industry Day event ran concurrently with the technical research sessions. However, we only had the chance to see the excellent talk by Flavio Junqueira on the practical aspects of caching in search engine deployments. There is a comprehensive summary of the whole Industry program in this blog post. We believe that scheduling the Industry Day in parallel with the technical sessions was detrimental to attendance. Next year, the Industry Day will be held after the conference ends.

Finally, we would like to thank the organisers of ECIR 2011 for a very enjoyable conference, and a great stay in Dublin. ECIR 2012 will be held in Barcelona, Spain, between 1st and 5th April 2012. We hope to see you all there.